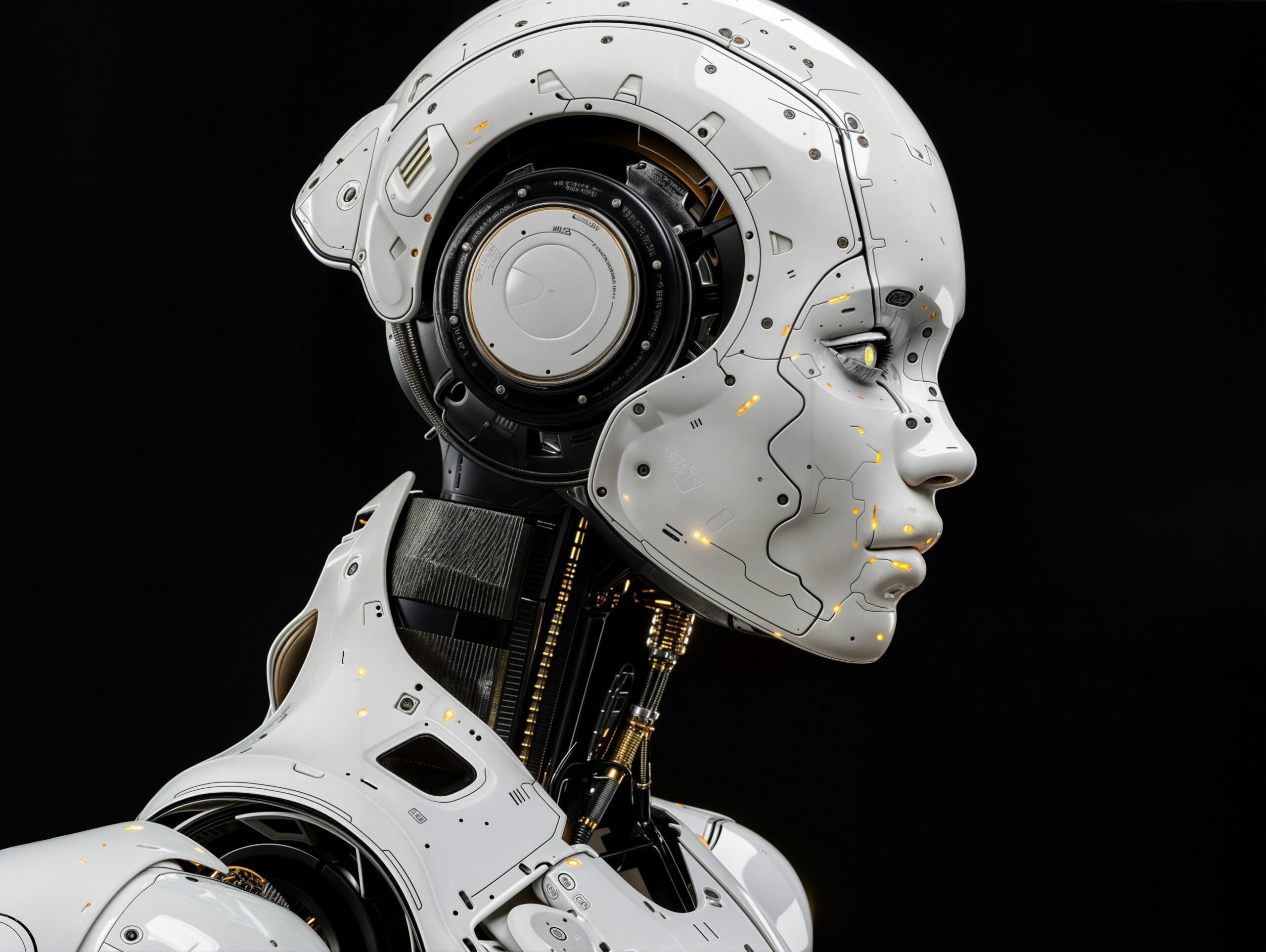

Advanced machine learning techniques are pervasive and are being integrated into many fields, with a broad impact on society and the economy. Three world-class experts summarized how they anticipate the future of machine learning during a panel session organized by GESDA at the Applied Machine Learning Days EPFL 2021 conference.

The astonishing progress made by machine learning (ML) techniques has been driven by real-world problems rather than by sudden major algorithmic breakthroughs, according to Michael I. Jordan, a leading computer scientist and a professor at the University of California, Berkeley. “A useful perspective on machine learning is to view it as an emerging real-world engineering discipline,” he said, in a discussion led by Rüdiger Urbanke, professor at EPFL and GESDA Academic Moderator.

For Michael I. Jordan, it is crucial to make sure that ML systems do what they are supposed to do without creating new problems. This will require understanding the incentives that arise once algorithms are deployed and considering the processes needed to build trust, he added. For the computer scientist, the field of artificial intelligence needs to be embedded in broader disciplines that can deliver such insights, such as economics, health, game theory and the social sciences. “The goal in ML is not to build systems that mimic humans but rather make real-world systems which work for society,” he said.

How can we trust the machines?

Key issues addressed by the experts included reliability, interpretability and transparency. Machine learning algorithms are often seen as black boxes that generate answers to given inputs without providing any explanation of how they reached their outcome. This raises the question of when – or if – we can trust the analyses provided by AI systems. The expanding field of “reliable AI” – which develops strategies and techniques to make sure that algorithms work the way they are supposed to – can leverage the experience of the traditional computer verification community, said Jeannette Wing, Director of the Data Science Institute at Columbia University and former Vice President of Microsoft Research. “But artificial intelligence brings entirely new aspects to this issue, such as robustness, interpretability and fairness,” she said. Indeed, it is increasingly recognized that ML algorithms are not neutral: they can reproduce the biases present in the data on which they are trained, potentially reinforcing inequalities in society. This is a major issue when artificial intelligence is used in judicial or policy decisions.

Pushmeet Kohli, Head of AI for Science at Google DeepMind, underlined that we usually make a holistic evaluation of the confidence we have in a system that takes a decision or makes a recommendation, be it an algorithm, an administrative unit or a person. “Different applications of ML systems will require different levels of verification,” he said. “In some cases, making sure the test data is unbiased and appropriate might suffice; in other cases you will have to make sure that generalizing the system to your own particular case is indeed justified.”

The three experts stressed that machine learning is essentially a programming technique that is successful at specific tasks, mainly pattern recognition. AI systems will only have their full impact once they can provide reliable and trustworthy decision-making capabilities. For Michael I. Jordan, we are still far from this point, in particular because decisions are often made iteratively. For instance, a patient consulting a doctor usually provides additional information on the spot, information that would not have been contained in the data used to train an ML system. “I believe that most decisions are taken this way, and that decisions interact with each other,” he added. “I view these interactions like a market where you have an exchange of ideas, signals about trust and feedback loops. That’s why I think that computer science is not enough to design robust systems: the field will also need insights from social sciences.”

Understanding artificial intelligence is crucial

For Jeannette Wing, “causal reasoning will be the next big thing”, namely the ability to answer “what if” questions. Such techniques can help establish causation (“A leads to B”) rather than mere correlation (“A and B arise together”), which is essential in medical applications and policy decisions. Machine learning systems will be able to gain more knowledge if they can combine different kinds of data, added Pushmeet Kohli. Metadata – the information describing the data itself – should try to encapsulate experience, an essential ingredient for drawing new insights.

When asked about the holy grail of ML over the next ten years, Michael I. Jordan pointed to the ability of algorithms to incorporate real-world abstractions and common sense. To date, the most advanced models can process huge amounts of data but cannot extract meaning from it the way humans can.

While it is often hoped that artificial intelligence could help accelerate scientific discovery, “the formulating of new fundamental principles will need human scientists,” said Michael I. Jordan. “However, scientific laws must be adapted to concrete applications in order to be of practical use. This is where data is useful and where machine learning techniques could contribute.” For Pushmeet Kohli, the question is not whether computers or machine learning algorithms will be able to discover new mathematical laws or new physics, but rather whether humans will be able to understand those concepts. Finally, for Jeannette Wing, algorithms might be able to find new abstractions – useful encapsulations of knowledge that can be further processed – but it remains unclear whether humans will be able to understand them: “If we humans still want to have a role to play on the planet, we will have to be able to interpret what algorithms say and why they say it.”

(Text by Daniel Saraga for GESDA)